Last Updated on: 13th June 2024, 08:17 pm

Field-aware Factorization Machines on CUDA.

Today we’re open-sourcing CUDA FFM – our tool for very fast FFM training and inference.

You can expect a 50-70x speedup in training (compared to the CPU implementation) and a 5-10x speedup in inference (compared to a non-AVX-optimized implementation).

What’s inside?

- very fast FFM trainer that trains FFM models on the GPU

- very fast FFM prediction C++ library (using CPU)

- Java bindings to that library (via JNI)

- a few dataset management utilities (splitting, shuffling, conversion)

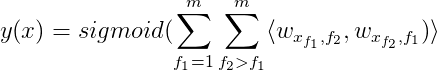

Field-aware Factorization Machines (FFM) is a machine learning model described by the following equation:
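In its standard pairwise form, as given in the original FFM paper (the exact variant CUDA FFM implements, for example whether it adds a bias term, is not stated here), it reads:

$$\phi_{\text{FFM}}(\mathbf{w}, \mathbf{x}) = \sum_{j_1=1}^{n} \sum_{j_2=j_1+1}^{n} \left(\mathbf{w}_{j_1,f_2} \cdot \mathbf{w}_{j_2,f_1}\right) x_{j_1} x_{j_2}$$

Here $n$ is the number of features, $x_j$ is the value of feature $j$, $f_1$ and $f_2$ are the fields that features $j_1$ and $j_2$ belong to, and $\mathbf{w}_{j,f}$ is the latent vector that feature $j$ uses when it interacts with a feature from field $f$.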

Check out the original paper for the details, or our README for a quick summary.
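To make the model concrete, here is a minimal pure-Python sketch of the standard pairwise FFM score: for every pair of active features, take the dot product of the latent vectors that each feature keeps for the other one's field, and scale it by the two feature values. This is not CUDA FFM's actual code. The function names `ffm_score` and `ffm_probability`, the nested-list weight layout, and the logistic link on top of the score are all assumptions made for illustration.

```python
import math


def ffm_score(weights, features):
    """Pairwise FFM score for one sample.

    weights[j][f] is the latent vector (a list of k floats) that feature j
    uses when interacting with a feature from field f (hypothetical layout).
    features is a list of (field, feature_index, value) triples.
    """
    score = 0.0
    for a, (f1, j1, x1) in enumerate(features):
        # Visit each unordered pair of features exactly once.
        for f2, j2, x2 in features[a + 1:]:
            # Feature j1 uses its vector for field f2, and vice versa.
            dot = sum(p * q for p, q in zip(weights[j1][f2], weights[j2][f1]))
            score += dot * x1 * x2
    return score


def ffm_probability(weights, features):
    """Map the score to (0, 1) with a logistic link (an assumption here)."""
    return 1.0 / (1.0 + math.exp(-ffm_score(weights, features)))


if __name__ == "__main__":
    # Toy model: 3 features, 3 fields, latent dimension k = 2.
    toy_weights = [
        [[1.0, 0.0], [1.0, 2.0], [0.0, 1.0]],  # feature 0, vectors for fields 0..2
        [[3.0, 1.0], [0.0, 0.0], [2.0, 2.0]],  # feature 1
        [[1.0, 1.0], [0.5, 0.0], [0.0, 0.0]],  # feature 2
    ]
    toy_sample = [(0, 0, 1.0), (1, 1, 1.0), (2, 2, 2.0)]
    print(ffm_score(toy_weights, toy_sample))
    print(ffm_probability(toy_weights, toy_sample))
```

The double loop is quadratic in the number of active features per sample and linear in the latent dimension. A sketch like this is useful for checking small cases by hand, not for performance.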