Last Updated on: 21st June 2024, 11:55 am

Abstract

In a developer’s daily work with the cloud, there is often a need to authenticate to cloud resources and services. There are many ways to obtain authentication credentials to the cloud, but some are more secure and preferred than others.

In this article, I will show you how to gain access to Google Cloud Platform services safely in various contexts of application operation.

The examples in this article will be written in the Python programming language, but other languages have very similar authentication logic, so the concepts shown will also apply to other languages.

You should already have Google Cloud CLI installed. If you don’t have it, use this guide to install it.

Authentication of the application to Google Cloud services

In the examples presented, we will always try to authenticate using the default() function from the google.auth package. Many Google Cloud libraries call it by default, so if we provide the application with the right conditions, we can use Google Cloud APIs without any additional steps. The behavior of this function is well documented; in summary, it searches for credentials in the following order:

- If the GOOGLE_APPLICATION_CREDENTIALS environment variable is set and points to a valid JSON file with a private key to the service account, the credentials will be loaded from this file and returned.

- If Google Cloud SDK is installed and application default credentials are set, they will be loaded and returned.

- If the app runs on Google App Engine (first generation), credentials will be returned from the App Identity Service.

- If the application runs on Compute Engine, Cloud Run, App Engine flexible environment, or App Engine standard environment (second generation), the credentials will be returned from Metadata Service.

- If no credentials are found, DefaultCredentialsError will be raised.
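The search order above can be sketched in a few lines of plain Python. This is only an illustration of the order of the first two steps, not the library's implementation: the function name describe_adc_source is ours, the well-known path is the Linux/macOS location used later in this article, and the real default() function additionally validates file contents and probes the platform it runs on.

```python
import os
from pathlib import Path


def describe_adc_source(env: dict[str, str], home: Path) -> str:
    """Return a label for the first credential source a simplified lookup would pick."""
    # 1. An explicit file referenced by GOOGLE_APPLICATION_CREDENTIALS wins.
    explicit = env.get("GOOGLE_APPLICATION_CREDENTIALS")
    if explicit:
        return f"file from GOOGLE_APPLICATION_CREDENTIALS: {explicit}"

    # 2. Credentials saved by `gcloud auth application-default login`
    #    (assumed Linux/macOS location; other platforms differ).
    well_known = home / ".config" / "gcloud" / "application_default_credentials.json"
    if well_known.is_file():
        return f"gcloud application default credentials: {well_known}"

    # 3./4. Otherwise the runtime is asked (App Identity Service or metadata
    #       server); if that fails too, DefaultCredentialsError is raised.
    return "platform-provided credentials (App Identity Service or metadata server)"


if __name__ == "__main__":
    print(describe_adc_source(dict(os.environ), Path.home()))
```

Running the script on a workstation shows which of the first two sources would take precedence there.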

We can often find examples of authentication using JSON files with private keys to service accounts. However, their use carries a significant risk, which is especially high for keys with a very long or unlimited validity period. If an unauthorized person obtains such a file, they gain access to our cloud resources, which may result in:

- Data breach.

- Privilege escalation.

- Financial loss.

- Reputation damage.

- Compliance and GDPR/CCPA violations.

- Unavailability of your services.

Working with such files requires developers to be particularly careful regarding potential key theft or accidental exposure in public (e.g., code repository). Such files, for example, should not be passed between users, copied to temporary locations, compiled into program binaries, embedded in docker images, or committed to git repositories. Moreover, policies and mechanisms for their rotation should be provided for such files.
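One way to reduce the risk of accidental exposure is to scan a working tree for files that look like service account keys before committing. Below is a minimal sketch, assuming only that such key files are JSON objects with "type": "service_account" and a "private_key" field; the function names are ours, and a real setup would more likely rely on a dedicated secret scanner.

```python
import json
from pathlib import Path


def looks_like_service_account_key(text: str) -> bool:
    """Return True if the text parses as JSON that resembles a service account key."""
    try:
        data = json.loads(text)
    except json.JSONDecodeError:
        return False
    # Key files are JSON objects with "type": "service_account" and a
    # "private_key" field holding the PEM-encoded key.
    return (
        isinstance(data, dict)
        and data.get("type") == "service_account"
        and "private_key" in data
    )


def find_key_files(root: Path) -> list[Path]:
    """Scan *.json files below root and return those that look like key files."""
    hits = []
    for path in root.rglob("*.json"):
        try:
            if looks_like_service_account_key(path.read_text(encoding="utf-8")):
                hits.append(path)
        except (OSError, UnicodeDecodeError):
            continue  # unreadable files are skipped in this sketch
    return hits
```

Calling find_key_files(Path(".")) from a pre-commit hook and failing when the returned list is not empty would block the most obvious mistakes.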

One of the best, safest, and easiest-to-use alternatives to private key files is the use of short-lived access tokens. Compared to key files, they have many advantages:

- Security: short-lived access tokens have a limited lifespan, typically ranging from minutes to hours. This reduces the window of opportunity for attackers to abuse compromised tokens.

- Rotation: short-lived access tokens force frequent rotation. Regularly rotating tokens enhances security by mitigating the risk of unauthorized access due to compromised credentials.

- Granular access control: short-lived tokens can be scoped and restricted to specific resources or actions, providing finer control over access permissions.

- Auditability: the frequent issuance and expiration of short-lived tokens make it easier to track and audit access to resources. This enhances visibility into who accessed what and when, facilitating compliance with security and regulatory requirements.

- Reduced impact of compromise: Short-lived access tokens are usually not written anywhere permanently because it isn’t necessary to do so. Accidentally placing them anywhere in the code, repository, or Docker image is not that big of a risk due to their short expiration date.

Overall, short-lived access tokens offer enhanced security, improved access control, and better auditability compared to long-lived service account keys in JSON files, making them a preferred choice in many security-conscious environments. Later in this article, we will refrain from generating files with keys for service accounts, and we will focus on the use of short-lived tokens in CI and production environments and personal developer credentials in the development environment.

Sample application

Our application will be a simple API to show a country’s current top search trends. For this purpose, we will use publicly available datasets in the BigQuery service. Google Trends – International is a publicly available free dataset that includes the top 25 stories and top 25 rising queries from Google Trends. We will name our GCP project auth-in-various-contexts. First, we need to create a dataset and view in our Google Cloud project that makes it easier to query the latest top trends from our application:

$ bq --location=us mk --dataset sample_application
Dataset 'auth-in-various-contexts:sample_application' successfully created.

$ bq mk \
    --use_legacy_sql=false \
    --project_id auth-in-various-contexts \
    --view 'SELECT country_name, country_code, region_name, region_code, term
            FROM `bigquery-public-data.google_trends.international_top_terms`
            WHERE rank = 1
              AND week = (
                SELECT MAX(week)
                FROM `bigquery-public-data.google_trends.international_top_terms`)
              AND refresh_date = (
                SELECT MAX(refresh_date)
                FROM `bigquery-public-data.google_trends.international_top_terms`)' \
    sample_application.top_trends
View 'auth-in-various-contexts:sample_application.top_trends' successfully created.

Let’s check our new view:

$ bq query \
    --use_legacy_sql=false \
    'SELECT * FROM sample_application.top_trends LIMIT 10'
+--------------+--------------+---------------------------------+-------------+-------------+
| country_name | country_code | region_name                     | region_code | term        |
+--------------+--------------+---------------------------------+-------------+-------------+
| Poland       | PL           | Lower Silesian Voivodeship      | PL-DS       | Ekstraklasa |
| Poland       | PL           | Kuyavian-Pomeranian Voivodeship | PL-KP       | Ekstraklasa |
| Poland       | PL           | Opole Voivodeship               | PL-OP       | Ekstraklasa |
| Poland       | PL           | Podkarpackie Voivodeship        | PL-PK       | Ekstraklasa |
| Poland       | PL           | Silesian Voivodeship            | PL-SL       | Ekstraklasa |
| Poland       | PL           | Warmian-Masurian Voivodeship    | PL-WN       | Ekstraklasa |
| Poland       | PL           | Lubusz Voivodeship              | PL-LB       | Ekstraklasa |
| Poland       | PL           | Łódź Voivodeship                | PL-LD       | Ekstraklasa |
| Poland       | PL           | Lublin Voivodeship              | PL-LU       | Ekstraklasa |
| Poland       | PL           | Pomeranian Voivodeship          | PL-PM       | Ekstraklasa |
+--------------+--------------+---------------------------------+-------------+-------------+

Everything seems to be fine.

Now let’s look at the code of our application written using the Flask framework:

from flask import Flask, jsonify
from google.cloud import bigquery

# Creating the client triggers the credential lookup described earlier.
bq_client = bigquery.Client()
app = Flask(__name__)

query = """
SELECT country_name, country_code, region_name, region_code, term
FROM sample_application.top_trends
WHERE LOWER(country_code) = LOWER(@country_code)
ORDER BY region_name DESC
"""


@app.route("/top-terms/<country_code>")
def hello_world(country_code: str):
    # Pass the country code as a query parameter instead of formatting it into the SQL.
    job_config = bigquery.QueryJobConfig(
        query_parameters=[bigquery.ScalarQueryParameter("country_code", "STRING", country_code)]
    )
    query_job = bq_client.query(query, job_config=job_config)
    results = query_job.result()

    if results.total_rows == 0:
        return jsonify({"message": "No country with that country code has been found"}), 404

    return jsonify({"results": [dict(row) for row in results]})


if __name__ == "__main__":
    app.run(host="0.0.0.0", port=5000)

It is a simple API exposing one endpoint that returns the top search terms for a given country code. This API uses the previously created view in BigQuery. What's important here is that somewhere during the BigQuery client initialization, the above-mentioned default() function from the google.auth package is called.
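To make that implicit call visible, here is a hedged sketch of what the client initialization roughly amounts to when the credentials are resolved by hand. The helper name get_bq_client is ours and is not part of the sample application; the imports are placed inside the function so that the lookup happens on first use rather than at import time.

```python
from functools import lru_cache


@lru_cache(maxsize=1)
def get_bq_client():
    """Create the BigQuery client on first use and reuse it afterwards."""
    # Imported lazily: importing this module then needs neither the
    # libraries nor any credentials.
    import google.auth
    from google.cloud import bigquery

    # default() walks the search order described earlier and returns the
    # credentials together with the project they belong to (which may be None).
    credentials, project_id = google.auth.default()
    return bigquery.Client(credentials=credentials, project=project_id)
```

With this variant, a missing credential surfaces as DefaultCredentialsError on the first request instead of when the module is imported. Whether that is desirable depends on the application; failing fast at startup, as the sample application does, is often the better choice.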

The full source code of the application is available at https://github.com/rtbhouse-apps/auth-in-various-contexts. You need to install the required Python packages from requirements.txt before running the application.

Authentication from the developer’s machine (without using Docker)

This will be the simplest case. When our code is running in a local development environment, such as a development workstation, the best option is to use the credentials associated with our personal user account. The installed Google Cloud CLI (gcloud) should be detected by the default() function, and the user’s credentials should be loaded automatically.

First of all, let’s acquire fresh user credentials using Google Cloud CLI:

$ gcloud auth application-default login
Your browser has been opened to visit:
    https://accounts.google.com/[CUT]
Credentials saved to file: [/Users/jacek/.config/gcloud/application_default_credentials.json]
These credentials will be used by any library that requests Application Default Credentials (ADC).

The above command obtains user access credentials via a web flow and puts them in a well-known location (e.g., $HOME/.config/gcloud/application_default_credentials.json) in your home directory. Any user with access to your file system can use those credentials. Leaking or stealing this file poses a very similar threat to leaking the file with the private key to the service account. It is a good idea to limit the expiration of user credentials as part of your organization’s policy. You can learn how to do it here. In particular, you should avoid situations where user credentials are valid indefinitely. If you no longer need these local credentials, you can revoke them by using the gcloud auth application-default revoke command.
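Because this file is as sensitive as a key file, it can be worth checking what it contains and who can read it. The sketch below is an illustration under two assumptions: the file is JSON with a "type" field ("authorized_user" for user credentials, "service_account" for key files), and the file system uses POSIX permission bits. The function names are ours.

```python
import json
import stat
from pathlib import Path


def describe_credentials(text: str, mode: int) -> dict:
    """Report the credential type and whether group/others may read the file."""
    info = json.loads(text)
    return {
        "type": info.get("type"),
        # True when the group or other users have read permission.
        "readable_by_others": bool(mode & (stat.S_IRGRP | stat.S_IROTH)),
    }


def describe_credentials_file(path: Path) -> dict:
    """Convenience wrapper that reads the text and mode from an actual file."""
    return describe_credentials(path.read_text(encoding="utf-8"), path.stat().st_mode)
```

For example, describe_credentials_file(Path.home() / ".config/gcloud/application_default_credentials.json") should report the type "authorized_user" after the login shown above.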

Let’s try to run our application:

$ python -m app
 * Serving Flask app 'app'
 * Debug mode: off
WARNING: This is a development server. Do not use it in a production deployment. Use a production WSGI server instead.
 * Running on http://127.0.0.1:5000
Press CTRL+C to quit

And use our API and check what the top search terms are in Poland:

$ curl -s http://127.0.0.1:5000/top-terms/pl | jq
{
  "results": [
    {
      "country_code": "PL",
      "country_name": "Poland",
      "region_code": "PL-LD",
      "region_name": "Łódź Voivodeship",
      "term": "Ekstraklasa"
    },
    {
      "country_code": "PL",
      "country_name": "Poland",
      "region_code": "PL-ZP",
      "region_name": "West Pomeranian Voivodeship",
      "term": "Ekstraklasa"
    },
    {
      "country_code": "PL",
      "country_name": "Poland",
      "region_code": "PL-WN",
      "region_name": "Warmian-Masurian Voivodeship",
      "term": "Ekstraklasa"
    },
    [CUT]
  ]
}

As you have probably noticed, the most popular search term in all regions of Poland is “Ekstraklasa”, the top tier of the men’s football league system in Poland.

Authentication from the developer’s machine (using Docker)

When we want to use Docker, things get a bit more complicated. The Google Cloud CLI installed on the developer’s machine is not visible inside the container, so without additional steps, authentication to Google Cloud APIs will not work automatically.

First of all, let’s build our Docker image (Dockerfile available in the repository):

$ docker build --quiet --tag top-terms-api .
sha256:19e15d7a64976fd4abf04e47fd3980527a8eeda0062436a480eb43ab65a59158

And test if it works:

$ docker run \
    --interactive \
    --tty \
    --rm \
    --publish 5000:5000 \
    top-terms-api
[CUT]
google.auth.exceptions.DefaultCredentialsError: Your default credentials were not found. To set up Application Default Credentials, see https://cloud.google.com/docs/authentication/external/set-up-adc for more information.

As we expected, the application is not able to obtain credentials to communicate with the Google Cloud API from inside the container.

As a workaround, we will mount the file with user credentials (generated by the gcloud command; see the warning above about protecting it) into the container so that our application code can work without additional modifications. Additionally, we need to set the path to this file in the GOOGLE_APPLICATION_CREDENTIALS environment variable:

$ docker run \
    --interactive \
    --tty \
    --rm \
    --publish 5000:5000 \
    --volume "$HOME/.config/gcloud/application_default_credentials.json":/gcp/creds.json:ro \
    --env GOOGLE_APPLICATION_CREDENTIALS=/gcp/creds.json top-terms-api
 * Serving Flask app 'app'
 * Debug mode: off
WARNING: This is a development server. Do not use it in a production deployment. Use a production WSGI server instead.
 * Running on all addresses (0.0.0.0)
 * Running on http://127.0.0.1:5000
 * Running on http://192.168.215.2:5000
Press CTRL+C to quit

Everything appears to be working as it should.

Authentication from GitHub Actions CI pipeline (without using Docker)

While checking our application during the Continuous Integration process, we usually choose to run application tests. Let’s take a look at the simplest integration test that performs an actual query on BigQuery, and, therefore, requires authentication to Google Cloud APIs:

from collections.abc import Generator

import pytest

from flask import Flask

from flask.testing import FlaskClient

from app.application import app as base_app

@pytest.fixture()

def app() -> Generator[Flask, None, None]:

app = base_app

app.config.update(

{

"TESTING": True,

}

)

yield app

@pytest.fixture()

def client(app: Flask) -> FlaskClient:

return app.test_client()

def test_response_ok(client: FlaskClient) -> None:

response = client.get("/top-terms/PL")

assert response.status_code == 200

assert "results" in response.json

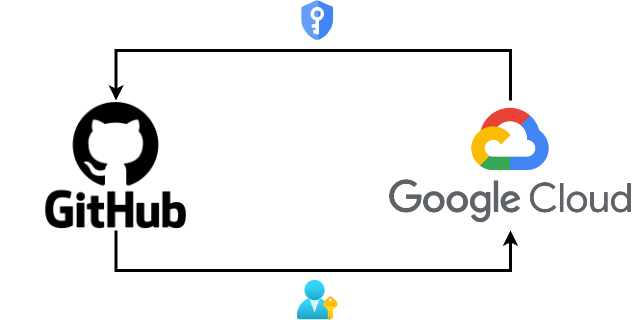

assert len(response.json["results"]) > 0In our CI pipeline, we will use google-github-actions/auth actions for authentication. This action offers several authentication methods, e.g., Workload Identity Federation or using a service account file. The first way is more preferred and safer. Please remember that our goal is to NOT use files with private keys for service accounts.

Workload Identity Federation (WIF) is a concept in cloud computing and identity management that enables applications and services running in cloud environments to securely access resources across different cloud providers or environments, without needing to manage separate sets of credentials for each environment.

Using Workload Identity Federation, workloads that run on GitHub Actions can exchange their GitHub OIDC (OpenID Connect) tokens for short-lived access tokens issued by the Google Cloud Security Token Service. The Google-recommended setup directly authenticates GitHub Actions to Google Cloud without intermediate service accounts or keys. To use WIF, we need to set it up by creating a Workload Identity Pool (an entity that lets you manage external identities) and a Workload Identity Provider (an entity that describes the relationship between Google Cloud and GitHub as an identity provider):

# Create workload identity pool
$ gcloud iam workload-identity-pools create github-wif-pool --location="global"
Created workload identity pool [github-wif-pool].

# Create provider for our new pool (for a single repository rtbhouse-apps/auth-in-various-contexts)
$ gcloud iam workload-identity-pools providers create-oidc "github-wif" \
    --project=auth-in-various-contexts \
    --location="global" \
    --workload-identity-pool="github-wif-pool" \
    --display-name="My GitHub repo Provider" \
    --attribute-mapping="google.subject=assertion.sub,attribute.actor=assertion.actor,attribute.repository=assertion.repository,attribute.repository_owner=assertion.repository_owner" \
    --attribute-condition="assertion.repository == 'rtbhouse-apps/auth-in-various-contexts'" \
    --issuer-uri="https://token.actions.githubusercontent.com"
Created workload identity pool provider [github-wif].

# Grant Access from a GitHub Action in specific repository to run BigQuery jobs
$ gcloud projects add-iam-policy-binding auth-in-various-contexts \
    --role="roles/bigquery.jobUser" \
    --member="principalSet://iam.googleapis.com/projects/1067231456173/locations/global/workloadIdentityPools/github-wif-pool/attribute.repository/rtbhouse-apps/auth-in-various-contexts"
Updated IAM policy for project [auth-in-various-contexts].
bindings:
- members:
  - principalSet://iam.googleapis.com/projects/1067231456173/locations/global/workloadIdentityPools/github-wif-pool/attribute.repository/rtbhouse-apps/auth-in-various-contexts
  role: roles/bigquery.jobUser
[CUT]

# Grant Access from a GitHub Action in specific repository to read BigQuery data
$ gcloud projects add-iam-policy-binding auth-in-various-contexts \
    --role=roles/bigquery.dataViewer \
    --member="principalSet://iam.googleapis.com/projects/1067231456173/locations/global/workloadIdentityPools/github-wif-pool/attribute.repository/rtbhouse-apps/auth-in-various-contexts"
Updated IAM policy for project [auth-in-various-contexts].
bindings:
- members:
  - principalSet://iam.googleapis.com/projects/1067231456173/locations/global/workloadIdentityPools/github-wif-pool/attribute.repository/rtbhouse-apps/auth-in-various-contexts
  role: roles/bigquery.dataViewer
[CUT]
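The attribute mapping, the attribute condition, and the principalSet member string used in the commands above fit together as sketched below. This is a plain-Python illustration of the idea, not how Google Cloud evaluates these expressions; the function names and the sample claim values (including the actor name) are hypothetical.

```python
def map_attributes(claims: dict[str, str]) -> dict[str, str]:
    """Mirror the --attribute-mapping flag: copy OIDC claims to Google attributes."""
    return {
        "google.subject": claims["sub"],
        "attribute.actor": claims["actor"],
        "attribute.repository": claims["repository"],
        "attribute.repository_owner": claims["repository_owner"],
    }


def condition_allows(claims: dict[str, str], allowed_repository: str) -> bool:
    """Mirror the --attribute-condition flag: accept tokens from one repository only."""
    return claims.get("repository") == allowed_repository


def repository_principal_set(project_number: str, pool_id: str, repository: str) -> str:
    """Build the IAM member that matches every identity from one GitHub repository."""
    return (
        "principalSet://iam.googleapis.com/"
        f"projects/{project_number}/locations/global/"
        f"workloadIdentityPools/{pool_id}/attribute.repository/{repository}"
    )


# Hypothetical claims of a GitHub Actions OIDC token, for illustration only.
sample_claims = {
    "sub": "repo:rtbhouse-apps/auth-in-various-contexts:ref:refs/heads/main",
    "actor": "some-developer",
    "repository": "rtbhouse-apps/auth-in-various-contexts",
    "repository_owner": "rtbhouse-apps",
}

if __name__ == "__main__":
    print(condition_allows(sample_claims, "rtbhouse-apps/auth-in-various-contexts"))
    print(map_attributes(sample_claims)["attribute.repository"])
    print(repository_principal_set(
        "1067231456173", "github-wif-pool", "rtbhouse-apps/auth-in-various-contexts"
    ))
```

The condition rejects tokens from any other repository before the mapped attributes are even considered, and the role bindings then apply only to identities whose attribute.repository matches.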

Once WIF is set up, we can look at a simple workflow that tests our application in GitHub Actions:

name: CI

on: [push]

jobs:
  build:
    runs-on: ubuntu-latest
    permissions:
      contents: "read"
      id-token: "write"
    steps:
      - uses: actions/checkout@v4

      - name: Set up Python 3.12
        uses: actions/setup-python@v5
        with:
          python-version: "3.12"

      - name: Install dependencies
        run: |
          python -m pip install --upgrade pip
          pip install -r requirements.txt

      - name: Authenticate to Google Cloud
        uses: "google-github-actions/auth@v2"
        with:
          project_id: "auth-in-various-contexts"
          workload_identity_provider: "projects/1067231456173/locations/global/workloadIdentityPools/github-wif-pool/providers/github-wif"

      - name: Run tests
        run: |
          python -m pytest

A successful CI run means that GitHub Actions has correctly obtained permissions to query our dataset in BigQuery. The google-github-actions/auth action creates a file with short-lived credentials and exports the GOOGLE_APPLICATION_CREDENTIALS environment variable, so the file is automatically detected by the Google Cloud API libraries. This file is deleted when the job is finished.
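To make the token exchange less abstract, here is a minimal sketch of the request that swaps a GitHub OIDC token for a Google access token at the Security Token Service. The parameter names follow the OAuth 2.0 token exchange format (RFC 8693) accepted by the STS endpoint; the function names are ours, the token value in the example is a placeholder, and nothing is sent over the network. In practice, the google-github-actions/auth action and the Google client libraries perform this step for us.

```python
from urllib.parse import urlencode
from urllib.request import Request

STS_TOKEN_URL = "https://sts.googleapis.com/v1/token"


def sts_exchange_form(subject_token: str, provider: str) -> dict[str, str]:
    """Form fields for exchanging an external OIDC token for a Google access token."""
    return {
        "grant_type": "urn:ietf:params:oauth:grant-type:token-exchange",
        # The audience names the Workload Identity Provider that should verify the token.
        "audience": f"//iam.googleapis.com/{provider}",
        "scope": "https://www.googleapis.com/auth/cloud-platform",
        "requested_token_type": "urn:ietf:params:oauth:token-type:access_token",
        "subject_token_type": "urn:ietf:params:oauth:token-type:jwt",
        # The OIDC token issued by GitHub for the running job.
        "subject_token": subject_token,
    }


def build_sts_request(subject_token: str, provider: str) -> Request:
    """Build (but do not send) the POST request for the token exchange."""
    body = urlencode(sts_exchange_form(subject_token, provider)).encode("ascii")
    return Request(STS_TOKEN_URL, data=body, method="POST")


if __name__ == "__main__":
    provider = (
        "projects/1067231456173/locations/global/"
        "workloadIdentityPools/github-wif-pool/providers/github-wif"
    )
    request = build_sts_request("PLACEHOLDER_GITHUB_OIDC_TOKEN", provider)
    print(request.get_method(), request.full_url)
```

The provider path is the same value that the workflow passes as workload_identity_provider.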

Authentication from GitHub Actions CI pipeline (using Docker)

Sometimes we need to use Docker during CI, for example, when we have dependencies on other services such as Redis or Postgres, or sometimes we just want to test the application in an isolated container environment.

This time we will also want to build a production Docker image so that we can send it to the Docker image registry and be able to use it later in a production deployment. To log in to the private Docker image registry in Google Artifact Registry, we will need an access token. It is not possible to generate an access token without an intermediate service account with access to the Artifact Registry because it doesn’t support identity federation. So let’s create an account with the appropriate permissions:

# Create a new service account
$ gcloud iam service-accounts create github-actions-registry-writer --display-name "GitHub Actions writing to Artifact Registry"
Created service account [github-actions-registry-writer].

# Grant the new service account permissions to write to the Docker registry
$ gcloud artifacts repositories add-iam-policy-binding docker-apps \
    --member=serviceAccount:github-actions-registry-writer@auth-in-various-contexts.iam.gserviceaccount.com \
    --role=roles/artifactregistry.writer
Updated IAM policy for repository [docker-apps].
bindings:
- members:
  - serviceAccount:github-actions-registry-writer@auth-in-various-contexts.iam.gserviceaccount.com
  role: roles/artifactregistry.writer
etag: BwYVpt5qkMs=
version: 1

# Grant permissions to authenticate workloads from GitHub Actions to the new service account
$ gcloud iam service-accounts add-iam-policy-binding github-actions-registry-writer@auth-in-various-contexts.iam.gserviceaccount.com \
    --role="roles/iam.workloadIdentityUser" \
    --member="principalSet://iam.googleapis.com/projects/1067231456173/locations/global/workloadIdentityPools/github-wif-pool/attribute.repository/rtbhouse-apps/auth-in-various-contexts"
Updated IAM policy for serviceAccount [github-actions-registry-writer@auth-in-various-contexts.iam.gserviceaccount.com].
bindings:
- members:
  - principalSet://iam.googleapis.com/projects/1067231456173/locations/global/workloadIdentityPools/github-wif-pool/attribute.repository/rtbhouse-apps/auth-in-various-contexts
  role: roles/iam.workloadIdentityUser
etag: BwYVpuDTV_o=
version: 1

To use Docker, our workflow needs a few changes. First of all, we have to mount the credentials file (generated by the google-github-actions/auth action) to the Docker container:

[CUT]
      - name: Run tests
        run: |
          docker run --rm --volume "${{ steps.gcp-auth.outputs.credentials_file_path }}":/gcp/creds.json:ro \
            --env GOOGLE_APPLICATION_CREDENTIALS=/gcp/creds.json ${{ env.DOCKER_IMAGE }}:${{ env.TEST_TAG }} pytest

We will also create a separate job for building a production Docker image:

[CUT]
  build-push-docker:
    name: Build and push Docker image
    runs-on: ubuntu-latest
    needs: ci
    steps:
      - name: Checkout code
        uses: actions/checkout@v4

      - name: Authenticate to Google Cloud with Service Account
        id: gcp-auth-with-service-account
        uses: "google-github-actions/auth@v2"
        with:
          token_format: "access_token"
          project_id: "auth-in-various-contexts"
          workload_identity_provider: "projects/1067231456173/locations/global/workloadIdentityPools/github-wif-pool/providers/github-wif"
          service_account: "github-actions-registry-writer@auth-in-various-contexts.iam.gserviceaccount.com"

      - name: Set up Docker Buildx
        uses: docker/setup-buildx-action@v3

      - name: Login to Docker Hub
        uses: docker/login-action@v3
        with:
          registry: europe-west4-docker.pkg.dev
          username: "oauth2accesstoken"
          password: "${{ steps.gcp-auth-with-service-account.outputs.access_token }}"

      - name: "Set docker image metadata"
        id: "docker-metadata"
        uses: "docker/metadata-action@v5"
        with:
          images: |
            ${{ env.DOCKER_IMAGE }}
          tags: |
            type=ref,event=branch
            type=sha

      - name: Build and load docker image
        uses: docker/build-push-action@v5
        with:
          push: true
          tags: ${{ steps.docker-metadata.outputs.tags }}
          labels: ${{ steps.docker-metadata.outputs.labels }}

Our workflow now consists of two jobs:

- CI job: builds and loads the Docker image. Here, we authenticate directly, without a service account, so that we can run tests that use BigQuery.

- Build and push Docker image: builds the production Docker image. Here, we authenticate with an intermediate service account so that we can log in to the private Docker image registry.

The full source of CI workflow with Docker is available here.

A word of warning: in a production environment, you should have a separate Dockerfile or a multi-stage Dockerfile so that the production Docker image does not contain development packages or test code. Also, the API itself should be deployed using a proper production server, e.g., Gunicorn, not the development server. For the sake of simplicity, these things have been omitted from the demonstration.

Authentication from Kubernetes workloads

Assuming that we deploy our application in GKE, the preferred way to securely authenticate Kubernetes applications to Google Cloud APIs is Workload Identity Federation for GKE. It lets us use IAM policies to grant Kubernetes workloads in our GKE cluster access to specific Google Cloud APIs. When we do this correctly, our application will be able to automatically obtain a short-lived token from the GKE metadata server for the identity the Pod runs as (its Kubernetes service account or, if configured, the IAM service account it impersonates), without any changes in code. Tokens obtained from the GKE metadata server are valid for one hour and are refreshed upon expiration. Please note that Workload Identity Federation for GKE must be enabled on a GKE cluster for this to work.
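For the curious, the request that the client libraries send to the metadata server can be sketched with the standard library alone. The URL and the Metadata-Flavor header are the ones used by the Google Cloud metadata server; the function names are ours, and fetch_access_token only works from inside a workload that can reach the metadata server (for example, a Pod in a cluster with Workload Identity Federation for GKE enabled).

```python
import json
from urllib.request import Request, urlopen

METADATA_TOKEN_URL = (
    "http://metadata.google.internal/computeMetadata/v1/"
    "instance/service-accounts/default/token"
)


def build_token_request() -> Request:
    """Build the metadata server request for an access token."""
    # The metadata server only answers requests that carry this header.
    return Request(METADATA_TOKEN_URL, headers={"Metadata-Flavor": "Google"})


def fetch_access_token(timeout: float = 2.0) -> dict:
    """Fetch a token; the response has access_token, expires_in and token_type keys."""
    with urlopen(build_token_request(), timeout=timeout) as response:
        return json.load(response)
```

Application code normally never does this by hand, because the default() function falls back to the metadata server on its own.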

First of all, we need to create a new Kubernetes service account for our workload:

$ kubectl create serviceaccount top-terms-api
serviceaccount/top-terms-api created

Now let’s grant the appropriate roles directly to our Kubernetes service account:

$ gcloud projects add-iam-policy-binding projects/auth-in-various-contexts \
    --role=roles/bigquery.jobUser \
    --member=principal://iam.googleapis.com/projects/1067231456173/locations/global/workloadIdentityPools/auth-in-various-contexts.svc.id.goog/subject/ns/default/sa/top-terms-api
Updated IAM policy for project [auth-in-various-contexts].
bindings:
- members:
  - principal://iam.googleapis.com/projects/1067231456173/locations/global/workloadIdentityPools/auth-in-various-contexts.svc.id.goog/subject/ns/default/sa/top-terms-api
  - principalSet://iam.googleapis.com/projects/1067231456173/locations/global/workloadIdentityPools/github-wif-pool/attribute.repository/rtbhouse-apps/auth-in-various-contexts
  role: roles/bigquery.jobUser
[CUT]

$ gcloud projects add-iam-policy-binding projects/auth-in-various-contexts \
    --role=roles/bigquery.dataViewer \
    --member=principal://iam.googleapis.com/projects/1067231456173/locations/global/workloadIdentityPools/auth-in-various-contexts.svc.id.goog/subject/ns/default/sa/top-terms-api
Updated IAM policy for project [auth-in-various-contexts].
bindings:
- members:
  - principal://iam.googleapis.com/projects/1067231456173/locations/global/workloadIdentityPools/auth-in-various-contexts.svc.id.goog/subject/ns/default/sa/top-terms-api
  - principalSet://iam.googleapis.com/projects/1067231456173/locations/global/workloadIdentityPools/github-wif-pool/attribute.repository/rtbhouse-apps/auth-in-various-contexts
  role: roles/bigquery.dataViewer
[CUT]

Then, let’s create a pod with this service account and previously built Docker image:

$ kubectl apply -f - <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: top-terms-api
  namespace: default
spec:
  serviceAccountName: top-terms-api
  containers:
  - name: api
    image: europe-west4-docker.pkg.dev/auth-in-various-contexts/docker-apps/top-terms-api:main
    resources:
      requests:
        cpu: 200m
        memory: 512Mi
EOF
pod/top-terms-api created

Now we can forward port 5000 from our new pod on Kubernetes to our machine:

$ kubectl port-forward pods/top-terms-api 5000:5000
Forwarding from 127.0.0.1:5000 -> 5000
Forwarding from [::1]:5000 -> 5000
Handling connection for 5000

And test it:

$ curl -s http://127.0.0.1:5000/top-terms/pl | jq
{
  "results": [
    {
      "country_code": "PL",
      "country_name": "Poland",
      "region_code": "PL-LD",
      "region_name": "Łódź Voivodeship",
      "term": "Ekstraklasa"
    },
    {
      "country_code": "PL",
      "country_name": "Poland",
      "region_code": "PL-ZP",
      "region_name": "West Pomeranian Voivodeship",
      "term": "Ekstraklasa"
    },
    {
      "country_code": "PL",
      "country_name": "Poland",
      "region_code": "PL-WN",
      "region_name": "Warmian-Masurian Voivodeship",
      "term": "Ekstraklasa"
    },
    [CUT]
  ]
}

Everything works as it should.

Wrapping up

Using short-lived access tokens in the cloud is crucial for security. They minimize the risk of unauthorized access by limiting the window of opportunity for attackers, providing greater control and visibility over access, and adapting well to dynamic cloud environments. When choosing an authentication method for cloud services, we should always consider whether the chosen method is the most secure one available. Files with private keys for service accounts should be used only as a last resort when other methods are not possible, and even then, you should take care to secure them and rotate them periodically.